Generative Models can Help Writers without Writing for Them

Note: all other authors are undergraduate students

HAI-GEN Workshop at IUI 2021

Abstract

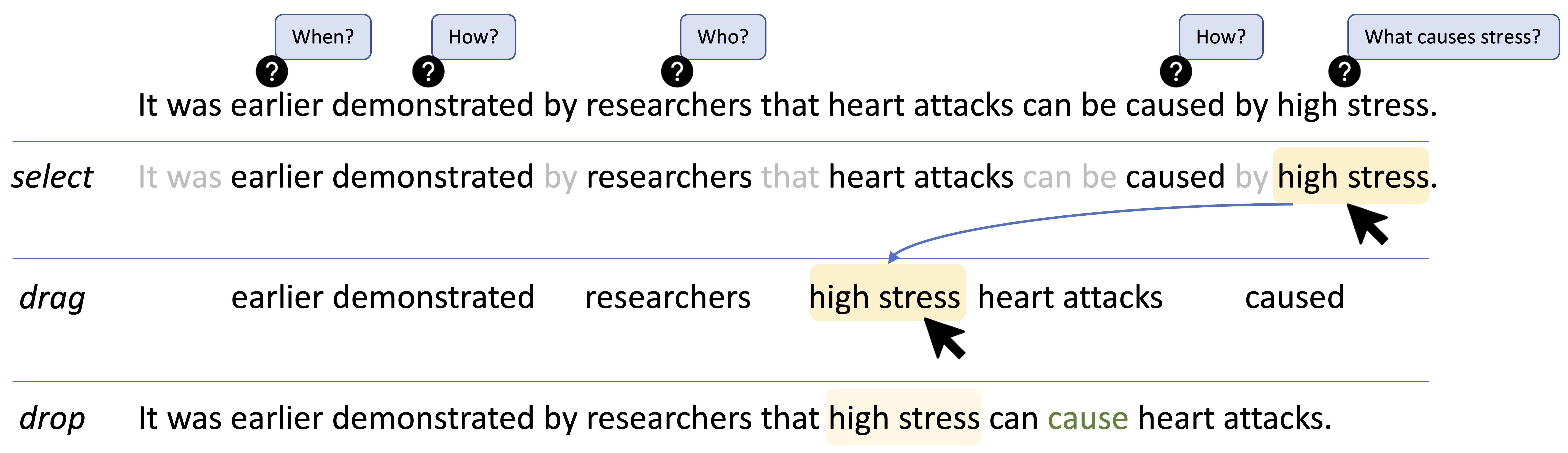

Computational models of language have the exciting potential to help writers generate and express their ideas. Current approaches typically provide their outputs to writers in a way that writers can (and often do) appropriate as their own, which gives the system more control than necessary over the final outcome of the writing. We present early explorations of two new types of interaction with generative language models; both share the design goal of keeping the writer in ultimate control while providing generative assistance. One interaction enables new kinds of structural manipulation of already-drafted sentences; it keeps the writer in semantic control by conditioning the output to be a paraphrase of human-provided input. The other interaction enables new kinds of idea exploration by offering writers questions rather than snippets; it keeps the writer in semantic control by presenting its ideas in an open-ended form. We report the results of early experiments on the feasibility and suitability of these types of interaction.