Sentiment Bias in Predictive Text Recommendations Results in Biased Writing

In GI’18

Note: The authors of "Co-Writing with Opinionated Language Models Affects Users’ Views" (CHI 2023) independently replicated our study with a better design, a much improved language model, and a much larger scale. The basic findings still hold, and the implications are just as concerning.

Abstract

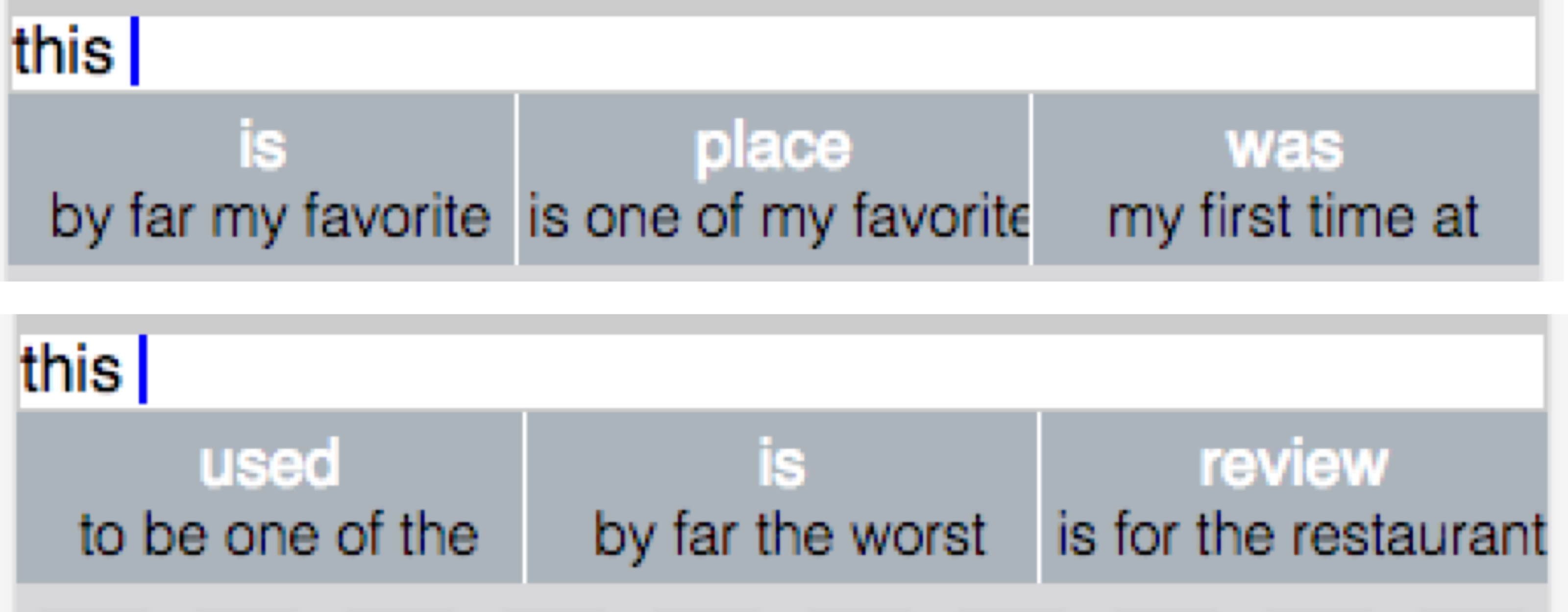

Prior research has demonstrated that intelligent systems make biased decisions because they are trained on biased data. As people increasingly leverage intelligent systems to enhance their productivity and creativity, could system biases affect what people create? We demonstrate that in at least one domain (writing restaurant reviews), biased system behavior leads to biased human behavior: People presented with phrasal text entry shortcuts that were skewed positive wrote more positive reviews than they did when presented with negative-skewed shortcuts. This result contributes to the pertinent debate about the role of intelligent systems in our society.